Dear entrepreneurs and managers, today we will address a crucial topic for your company’s online success: the crawl budget.

This seemingly technical concept has a direct impact on the visibility of your website and, consequently, on your digital business.

Of course, you do not have to carry out the activities listed in this article yourself. However, speaking entrepreneur to entrepreneur, if we do not decide that an activity gets done, it is unlikely that anyone in the company will take it on.

I recommend delegating it to your IT manager or webmaster. If you don’t have anyone who can do it for you, you can always delegate this important task to our SEO Specialist Team.

If you are still not sure whether to carry out this activity, let me draw a parallel for you.

Managing the crawl budget is like managing the advertising budget of a campaign.

Imagine producing an incredible commercial, or a set of outstanding ads, and then finding out that you have no budget left to distribute them.

Well … it is like building a beautiful website or e-commerce store and then wasting the budget that gets it indexed on Google.

And now we get to the heart of the matter.

Crawl budget: what it is and why you should care

The crawl budget represents the time and resources Google devotes to crawling your website through its robot, Googlebot.

In practical terms, it is the number of pages Google can and wants to crawl on your site in a given period.

For you entrepreneurs, this means that the crawl budget determines how quickly and completely Google “reads” and indexes your site’s content.

An optimized crawl budget can make the difference between being visible or invisible in your potential customers’ searches.
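To put numbers on this, here is a minimal sketch in Python. The page count and the daily crawl figure are invented for illustration; you would replace them with your own data, for example the daily crawl requests you see in Search Console.

```python
import math


def days_for_full_crawl(total_pages: int, pages_crawled_per_day: int) -> int:
    """Return the whole number of days needed to crawl every page once."""
    if pages_crawled_per_day <= 0:
        raise ValueError("pages_crawled_per_day must be positive")
    return math.ceil(total_pages / pages_crawled_per_day)


# Hypothetical store: 50,000 URLs, with Googlebot fetching about 2,000 pages a day.
print(days_for_full_crawl(50_000, 2_000))  # 25
```

In this made-up case a new product could wait more than three weeks before Google sees it, which is why wasted crawls on useless pages matter.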

The impact of crawl budget on your SEO strategy

In the context of SEO Strategies, crawl budget plays a crucial role.

It does not directly affect the ranking of your pages, but it does determine how many and which pages are crawled by Google.

If crawling resources are wasted on duplicate, low-quality or irrelevant pages, the most strategic pages may be left out of Google’s index.

The crawl budget therefore has a significant indirect effect on your SEO:

- Indexing speed: A good crawl budget allows Google to quickly discover and index your new content or products.

- Site coverage: It ensures that all important pages on your site are crawled regularly.

- Resource efficiency: It prevents Google from wasting time on irrelevant pages, so it can focus on those that generate value for your business.

For complex sites such as editorial portals or large e-commerce sites with thousands of product pages, managing the crawl budget is critical. For example:

- New product pages must be crawled quickly to become visible to users.

- Outdated or duplicate pages risk consuming valuable resources.

Who should worry about the Crawl Budget?

I recommend that you read this article in depth if you manage:

- A large site (over 1 million unique pages)

- An e-commerce site with thousands of products

- A portal with frequently changing content

In any of these cases, crawl budget optimization should be a priority.

Google crawl budget: how to optimize it for your business

Optimizing the crawl budget means making sure that Google devotes its resources to the pages that really matter to your business.

Here are some practical strategies:

1. Improve the speed of your site

A fast site allows Googlebot to crawl more pages in less time. Invest in:

- Image optimization

- Efficient caching

- Quality hosting

2. Structure your site strategically

A clear and logical structure helps Googlebot navigate effectively:

- Create an intuitive page hierarchy

- Use an updated XML sitemap

- Implement an effective internal link strategy
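One way to check whether your hierarchy really is intuitive is to measure click depth, that is, how many clicks separate each page from the home page. The sketch below does this with a breadth-first search. The `site` dictionary is a hypothetical link map written by hand; in practice you would export it from a crawler, and the threshold of three clicks is only an assumption.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from the home page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link map: page -> pages it links to.
site = {
    "/": ["/shop", "/blog"],
    "/shop": ["/shop/shoes"],
    "/shop/shoes": ["/shop/shoes/red-sneaker"],
    "/blog": [],
}

depths = click_depths(site, "/")
deep_pages = [url for url, depth in depths.items() if depth >= 3]
print(deep_pages)  # ['/shop/shoes/red-sneaker']
```

Pages that show up in `deep_pages` are candidates for a link from a category page or from the main menu.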

3. Manage duplicate content

Duplicate content is a waste of crawl budget:

- Consolidate pages with similar content

- Use canonical tags to indicate the preferred version of a page

- Avoid creating duplicate URLs for the same content

Beware: duplicate content also hurts your rankings, because Google does not know which version to show.

In addition, duplicate content could confuse your users.
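If you want a rough idea of how much duplication you have, the following sketch groups URLs whose text is identical once case and spacing are ignored. The `pages` dictionary is invented sample data; a real check would read the text from a crawl export, and exact hashing will not catch pages that are merely similar.

```python
import hashlib
from collections import defaultdict


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose text is identical after normalizing case and whitespace."""
    groups = defaultdict(list)
    for url, text in pages.items():
        normalized = " ".join(text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical sample: the same product reachable through two URLs.
pages = {
    "/red-sneaker": "Red sneaker, size 42.",
    "/red-sneaker?ref=home": "Red  sneaker, size 42.",
    "/blue-sneaker": "Blue sneaker, size 42.",
}

print(find_duplicates(pages))  # [['/red-sneaker', '/red-sneaker?ref=home']]
```

Each group it returns is a set of URLs that should probably share one canonical tag or be consolidated.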

4. Optimize the robots.txt file

The robots.txt file is your tool for guiding Googlebot:

- Block access to unnecessary pages (e.g., administrative area)

- Clearly indicate which sections of the site are important

- Update this file regularly as needed for your site

Most CMSs and e-commerce platforms generate the robots.txt file automatically.

However, I recommend reviewing it from time to time.
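For that periodic check, a small sketch with Python’s standard `urllib.robotparser` module can tell you whether a given URL is blocked. The rules and the `www.example.com` URLs below are placeholders, not a recommended configuration, and Python’s parser is simpler than Google’s own, so treat the result as a first indication.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace with the file served by your site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/admin/login", "/products/red-sneaker"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(path, "allowed" if allowed else "blocked")
```

If a page you care about comes back as blocked, the robots.txt file is the first thing to fix.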

5. Monitor and correct errors

Server errors can waste the crawl budget:

- Check Google Search Console regularly for crawl errors

- Promptly correct 404 errors and other technical problems

- Implement 301 redirects for moved pages

I also recommend using tools like Semrush, which let you schedule audits of your website or e-commerce store and give you detailed reports of everything you need to fix.
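To get a feeling for how much of the crawl is lost to errors, you can summarize the response codes returned to the crawler. This is a minimal sketch under the assumption that you already have a list of status codes, for example from an audit export; the numbers below are invented.

```python
from collections import Counter


def status_share(statuses: list[int]) -> dict[str, float]:
    """Percentage of responses per status class (2xx, 3xx, 4xx, 5xx)."""
    if not statuses:
        return {}
    classes = Counter(f"{code // 100}xx" for code in statuses)
    total = len(statuses)
    return {name: round(count / total * 100, 1) for name, count in sorted(classes.items())}


# Hypothetical status codes from ten crawled URLs.
statuses = [200, 200, 200, 200, 200, 200, 301, 404, 404, 500]
print(status_share(statuses))  # {'2xx': 60.0, '3xx': 10.0, '4xx': 20.0, '5xx': 10.0}
```

In this example four requests out of ten did not return a normal page, which is budget you could win back.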

6. Manage JavaScript resources

If your site uses a lot of JavaScript:

- Make sure important content is accessible without JavaScript

- Use server-side rendering whenever possible

- Test how Googlebot sees your site with the “URL Inspection” tool in Search Console, which replaced the older “Fetch as Google” feature
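A quick way to verify the first point is to look at the HTML your server sends before any script runs and check that the key phrases are already there. The sketch below does this with Python’s standard `html.parser`. The HTML snippet and the phrases are made up; in a real check you would download the page source first.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def missing_from_html(raw_html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the text of the raw HTML."""
    extractor = TextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    text = " ".join(" ".join(extractor.chunks).split()).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


# Hypothetical product page: the price is only injected by JavaScript.
raw_html = (
    "<html><body><h1>Red Sneaker</h1><div id='price'></div>"
    "<script>render({price: '79 EUR'})</script></body></html>"
)
print(missing_from_html(raw_html, ["Red Sneaker", "79 EUR"]))  # ['79 EUR']
```

Anything the function reports as missing depends on JavaScript to appear.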

Monitoring and measuring crawl budget in Search Console

To effectively optimize the crawl budget, you must monitor it. Here’s how:

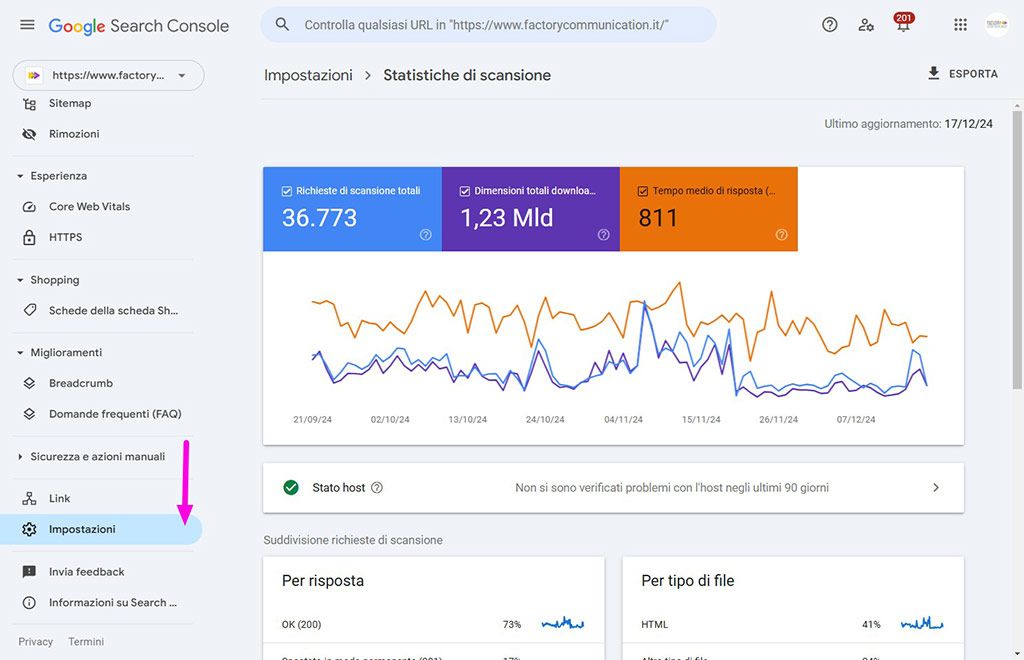

- Use Google Search Console:

  - Check the “Crawl Stats” report

  - Monitor the number of crawl requests and the average response time

- Analyze the server logs:

  - Check how many and which pages Googlebot is crawling

  - Identify any patterns or anomalies in the crawl

- Use advanced SEO tools:

  - Use tools such as SEOZoom or Semrush for more in-depth analysis

  - Monitor changes in your crawl budget over time

Crawl budget: how to identify the pages on your site that most need crawling

To identify the pages on your site that most need crawling, you can follow these steps:

Analyze server logs

Examine your web server logs to see which pages Googlebot crawls least often. Pages that are rarely crawled may need more attention.
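As a rough sketch of such an analysis, the code below counts Googlebot requests per URL and lists the important pages that were never requested. It assumes your server writes the common “combined” log format; the log lines, IP addresses and the `important` list are invented. Note that the user-agent string can be faked, so a serious audit would also verify that the requests really come from Google.

```python
import re
from collections import Counter

# Request, status and user-agent fields of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count requests per URL path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Hypothetical log lines.
sample_log = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /products/red-sneaker HTTP/1.1" 200 5123 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [11/Oct/2024:09:12:01 +0000] "GET /products/red-sneaker HTTP/1.1" 200 5123 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [11/Oct/2024:09:12:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT}"',
    f'203.0.113.7 - - [11/Oct/2024:10:00:00 +0000] "GET /about HTTP/1.1" 200 2048 "-" "{BROWSER}"',
]

hits = googlebot_hits(sample_log)
important = ["/products/red-sneaker", "/products/blue-sneaker"]
never_crawled = [page for page in important if hits[page] == 0]
print(never_crawled)  # ['/products/blue-sneaker']
```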

Use Google Search Console

The Search Console offers several useful tools:

- Check the “Crawl Stats” report to spot crawl errors or slow response times.

- Use the “URL Inspection” tool to check the indexing status of individual pages.

- Review the “Page indexing” report (formerly “Coverage”) to find pages with errors or pages excluded from the index.

Analyze the structure of the site

Identify the pages that are:

- Deeply nested in the structure of the site

- Linked from only a few internal pages

- Not included in the XML sitemap

These pages may have difficulty being discovered and crawled regularly.
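The last two checks can be sketched in a few lines. The link map and the sitemap set below are hypothetical examples, and the threshold of two internal links is an arbitrary assumption you should adapt to your site.

```python
from collections import Counter


def weak_pages(links: dict[str, list[str]], sitemap_urls: set[str], min_inlinks: int = 2):
    """Report pages with few incoming internal links or missing from the sitemap."""
    # Count each linking page once, even if it links to the target several times.
    inlinks = Counter(target for targets in links.values() for target in set(targets))
    report = {}
    for page in sorted(set(links) | set(inlinks)):
        issues = []
        if inlinks[page] < min_inlinks:
            issues.append("few internal links")
        if page not in sitemap_urls:
            issues.append("missing from sitemap")
        if issues:
            report[page] = issues
    return report


# Hypothetical internal link map (page -> pages it links to) and sitemap content.
links = {
    "/": ["/shop", "/blog"],
    "/shop": ["/", "/shop/shoes"],
    "/blog": ["/", "/shop"],
    "/shop/shoes": ["/"],
}
sitemap_urls = {"/", "/shop", "/blog"}

for page, issues in weak_pages(links, sitemap_urls).items():
    print(page, "->", ", ".join(issues))
```

With this sample data the report flags `/blog` for having a single incoming link and `/shop/shoes` for both problems.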

Check page performance

Pages with slow load times can consume more crawl budget. Use tools such as PageSpeed Insights to identify and optimize these pages.

Identify important but outdated content

To make the most of your crawl budget, look for pages with important content that are not updated frequently. These could benefit from more regular crawling.

Use advanced SEO tools

Tools such as Ahrefs Site Audit can help you:

- Find pages with unintentional noindex tags

- Identify pages not included in the sitemap

- Discover pages with few internal links

Monitor new pages

Pay special attention to recently created pages, making sure they are easily reachable and included in the sitemap.

Google crawl budget: which indicators to use to determine page crawl priority

To determine the crawl priority of the pages on your site, you can use several key indicators. Here are the main ones:

Engagement metrics

- Time spent on the page

- Scroll depth

- Conversion rate

Analyze this data through tools such as Google Analytics to identify the pages that generate the most user engagement.

Quality and relevance of content

- Originality and value for the user

- Presence of relevant keywords

- Semantic coherence

Pages with high-quality content relevant to user queries should be given priority for crawling.

Site structure and internal links

- Position in the site hierarchy

- Number of internal links pointing to the page

- Presence in the main navigation menus

Pages linked frequently and placed high in the site structure are considered more important.

In practice, this means increasing the number of internal links that point to your most important pages.

If your site or e-commerce store was built with WordPress, you can see the number of links pointing to each individual page; otherwise you can rely on the site audits run with Semrush.

In addition, there are dedicated plugins that analyze your content and suggest the most relevant internal links.

External signals

- Domain authority

- Quality and quantity of backlinks

- Popularity on social media

Pages with strong external signals indicate greater relevance to users.

Here you can plan a link building strategy to increase the authority of your most important pages.

Update frequency

- Date of last update

- Regularity of updates

Frequently updated pages need to be crawled more often.

Directions in the XML sitemap

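- Priority assigned in the sitemap (`<priority>` tag)

- Stated frequency of change (`<changefreq>` tag)

These tags let you declare the relative importance of pages. Keep in mind, however, that Google’s sitemap documentation says it ignores the `<priority>` and `<changefreq>` values; the sitemap signal it does use is an accurate `<lastmod>` date.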
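If your CMS does not produce a sitemap for you, a minimal one can be generated with Python’s standard library, as sketched below. The URLs and dates are placeholders. The sketch writes only `<loc>` and `<lastmod>` for each page; `<priority>` and `<changefreq>` elements could be added in the same way.

```python
import xml.etree.ElementTree as ET


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build a sitemap from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    ET.indent(urlset)  # pretty-printing; requires Python 3.9 or later
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages with the date of their last real change (YYYY-MM-DD).
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/products/red-sneaker", "2024-05-12"),
])
print(sitemap_xml)
```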

By monitoring and optimizing these indicators, you can positively influence the crawling priority of your most important pages, ensuring that they receive the attention they need from search engines.
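To turn these indicators into a working list, you could combine them into a simple score and sort your pages by it. The sketch below is only an illustration: the fields, the weights and the sample pages are all assumptions of mine, not a formula used by Google, and you should tune them to your business.

```python
from dataclasses import dataclass


@dataclass
class PageSignals:
    url: str
    conversions_per_month: int
    internal_links: int
    backlinks: int
    days_since_update: int


def priority_score(page: PageSignals) -> float:
    """Weighted sum of the indicators; the weights are arbitrary assumptions."""
    freshness = 1 / (1 + page.days_since_update / 30)  # 1.0 when updated today
    return (
        3.0 * page.conversions_per_month
        + 1.0 * page.internal_links
        + 2.0 * page.backlinks
        + 10.0 * freshness
    )


# Hypothetical pages.
pages = [
    PageSignals("/old-press-release", 0, 1, 0, 400),
    PageSignals("/products/red-sneaker", 20, 15, 5, 3),
]

ranked = sorted(pages, key=priority_score, reverse=True)
print([page.url for page in ranked])  # ['/products/red-sneaker', '/old-press-release']
```

The pages at the top of the ranking are the ones whose crawling you should protect first.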

Common mistakes that can reduce the crawl budget

There are several common mistakes that can significantly reduce a website’s crawl budget. Here are the main ones:

Duplicate content

Duplicate content is one of the most frequent problems that negatively affect the crawl budget.

Having the same content on multiple pages confuses search engines about which version to index, leading to wasted crawling resources.

To solve this problem, it is advisable to:

- Use canonical tags to indicate the main version of a page

- Consolidate similar content

- Remove or redirect duplicate pages

Server errors and pages not found

Frequent server errors or pages not found (404) can significantly decrease the crawl budget.

A stable and reliable site with few errors is more attractive to Google crawlers.

It is important to:

- Regularly monitor and correct server errors

- Resolve technical problems in a timely manner

- Implement 301 redirects for removed pages

Poorly optimized site structure

Confusing or poorly optimized site structure can lead to wasted crawl budget.

To improve this aspect:

- Ensure that the site has a logical and easily navigable structure

- Use a clear hierarchy in categories and subpages

- Implement breadcrumbs and sitemaps to help crawlers understand site structure

Overuse of JavaScript

Excessive use of JavaScript can slow down site crawling and indexing, reducing the efficiency of the crawl budget.

To optimize:

- Limit the use of JavaScript where possible

- Use server-side rendering for critical content

- Ensure that the main content is accessible even without JavaScript

High loading times

Pages that load slowly or time out have a negative impact on the crawl budget.

This tells search engines that the site cannot handle requests effectively. To improve:

- Optimize page loading speed

- Improve server performance

- Reduce response times to less than 1 second, if possible
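To find out which pages miss that target, you can average the response times you have measured per URL. The sketch assumes you already have (URL, seconds) samples, for instance from your server logs or a monitoring tool; the values below are invented.

```python
from collections import defaultdict
from statistics import mean


def slow_pages(samples: list[tuple[str, float]], threshold: float = 1.0) -> dict[str, float]:
    """Average response time per URL, keeping only URLs above the threshold."""
    by_url = defaultdict(list)
    for url, seconds in samples:
        by_url[url].append(seconds)
    averages = {url: round(mean(times), 2) for url, times in by_url.items()}
    return {url: avg for url, avg in averages.items() if avg > threshold}


# Hypothetical measurements in seconds.
samples = [("/", 0.3), ("/", 0.5), ("/search", 1.8), ("/search", 2.2)]
print(slow_pages(samples))  # {'/search': 2.0}
```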

Redundant URL parameters

URLs with redundant parameters can unnecessarily consume the crawl budget.

To avoid this problem:

- Remove or consolidate unnecessary URL parameters

- Use robots.txt rules to block parameter combinations that add no value (Google retired the URL Parameters tool in Search Console in 2022)

- Implement canonicalization for similar URL versions
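As an illustration of consolidating parameters, the sketch below strips tracking parameters and sorts the remaining ones, so that equivalent URLs collapse into a single form you can use in canonical tags or redirects. The list in `TRACKING_PARAMS` is an assumption; check which parameters your own site actually uses before removing anything.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that never change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "ref", "sessionid"}


def canonical_url(url: str) -> str:
    """Drop tracking parameters and sort the remaining ones."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(canonical_url("https://www.example.com/shoes?utm_source=news&color=red&ref=home"))
# https://www.example.com/shoes?color=red
print(canonical_url("https://www.example.com/shoes?size=42&color=red"))
# https://www.example.com/shoes?color=red&size=42
```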

By correcting these common errors, you can significantly optimize your site’s crawl budget, allowing search engines to focus on the most important and relevant pages.

Conclusions: crawl budget optimization as a strategic asset

For you entrepreneurs and managers, the crawl budget is not just a technical concept, but a real strategic asset. Optimizing it means:

- Increased Visibility: Your new products or content will be indexed more quickly.

- Resource Efficiency: You will focus Google’s attention on pages that generate value for your business.

- Competitive Advantage: A site that is well optimized for crawling can outperform competitors in SERPs.

Investing time and resources in crawl budget optimization may seem like a technical activity, but the results translate directly into business opportunities.

In an increasingly competitive digital marketplace, every advantage counts. Make sure your site is making the most of the resources Google devotes to it.

Remember: a well-managed crawl budget is the first step toward a more visible, efficient and high-performing website. Do not underestimate this crucial aspect of your online presence.
